Category: ‘Virtual Reality’

Paper published: Heard-text recall and listening effort under irrelevant speech and pseudo-speech in virtual reality

We are happy to announce that our paper “Heard-text recall and listening effort under irrelevant speech and pseudo-speech in virtual reality” was published this week.

The paper by Cosima A. Ermert, , , and

Using the Heard-Text-Recall (HTR) paradigm in a dual-task design, this paper evaluates the relevance of semantically meaningful background speech compared to pseudo-speech in virtual reality (VR). The results highlight the relevance of semantic content for both memory of spoken content and behavioural listening effort.

Read the paper at Acta Acustica.

Invitation to the closing event of the BaLSaM project on January 21, 2026

The project “Braunkohlereviere als attraktive Lebensräume durch Straßengeräuschsimulation auf Basis bestehender Verkehrsdaten zur Minimierung von Lärm” (BaLSaM; “Lignite mining regions as attractive living spaces through road noise simulation based on existing traffic data to minimize noise”) invites interested parties to its public closing event. It will take place on January 21, 2026, from 8:30 a.m. to 11:30 a.m. at the Institute for Hearing Technology and Acoustics (IHTA) at RWTH Aachen University (address: Kopernikusstraße 5, 52074 Aachen, Germany).

The BaLSaM project investigated the modeling of traffic noise propagation in urban environments and its impact on human perception. The BaLSaM consortium consists of the Institute for Automotive Engineering (IKA) and the Institute for Hearing Technology and Acoustics (IHTA) at RWTH Aachen University, as well as RHA REICHER HAASE ASSOZIIERTE GmbH and HEAD acoustics GmbH. The project is funded by the German Federal Ministry of Transport as part of the mFUND initiative.

During the closing event, the project partners will present the project results. The event will also provide an opportunity to exchange experiences and discuss future research questions. Participants can attend either in person or online.

Agenda:

| Time | Item |
| --- | --- |
| 08:30 | Arrival |
| 09:00 | Welcome |
| 09:15 | Introduction to the project |
| 09:30 | Presentation of results from the work packages |
| 11:00 | Discussion with participants |
| 11:15 | Start of the demo and close of the public part |
| Afterwards | Internal project wrap-up (not public) |

—————

Registration:

https://www.balsam-projekt.de/de/abschlussveranstaltung.html

Online participation via Teams:

Join the meeting now

Meeting ID: 317 428 128 635 50

Passcode: zg6pV6Yw

Paper published: Exploring auditory selective attention shifts in virtual reality: An approach with matrix sentences

We are happy to share that our paper “Exploring auditory selective attention shifts in virtual reality: An approach with matrix sentences” has been published in the Journal of the Acoustical Society of America:

https://doi.org/10.1121/10.0039864

In this study, we explored voluntary shifts of auditory selective attention in complex and more naturalistic acoustic environments. To move beyond earlier paradigms based on single-word stimuli, we introduced unpredictable German matrix sentences to simulate more realistic listening conditions.

Overall, the results were comparable to those of earlier versions of the paradigm, but no strong reorienting effect emerged. Interaction patterns still indicate that shifting auditory attention is more demanding than maintaining it, and that preparing attention benefits performance, as reflected in decreasing reaction times for later target onsets.

This approach contributes a paradigm for investigating auditory perception and attention in dynamic room acoustic environments, helping to close the gap between laboratory setups and real-world listening.

This work was created by Carolin Breuer and Janina Fels and was funded by the German Research Foundation (DFG) as part of the Priority Program SPP2236 AUDICTIVE.

Paper published: Exploring cross-modal perception in a virtual classroom: the effect of visual stimuli on auditory selective attention

A new paper “Exploring cross-modal perception in a virtual classroom: the effect of visual stimuli on auditory selective attention” has been published in Frontiers in Psychology, as part of the Research Topic “Crossing Sensory Boundaries: Multisensory Perception Through the Lens of Audition”: https://doi.org/10.3389/fpsyg.2025.1512851

In a virtual classroom environment, we investigated how visual stimuli influence auditory selective attention. Across two experiments, congruent and incongruent pictures modulated performance during an auditory attention task: concurrent visual input increased response times overall, and incongruent pictures led to more errors than congruent ones. When the visual stimuli preceded the sounds, timing mattered: a lead of 500 ms produced positive priming, whereas a lead of 750 ms produced semantic inhibition of return.

These results highlight that cross-modal priming differs from multisensory integration, and that temporal dynamics between modalities substantially shape attentional behaviour.

This work was a collaboration between Carolin Breuer, Lukas Jonathan Vollmer, Larissa Leist, Stephan Fremerey, Alexander Raake, Maria Klatte and Janina Fels.

It was funded by the Priority Programme SPP2236 AUDICTIVE and the Research Training Group (RTG) 2416 – MultiSenses, MultiScales, both of which are funded by the German Research Foundation (DFG).

Many thanks to everyone involved.

Paper published: The influence of complex classroom noise on auditory selective attention

We are very glad to share that our co-authored paper “The influence of complex classroom noise on auditory selective attention”, based on Robert Schmitt’s bachelor’s thesis, has just been published in Scientific Reports: https://www.nature.com/articles/s41598-025-18232-2

It has been a real pleasure to supervise this work and to see it evolve into a full publication. In the study, we examined how plausible classroom noise affects auditory selective attention in a virtual reality classroom environment.

Our results show higher error rates in the auditory attention task under complex noise conditions, as well as increased perceived listening effort when the background contained intelligible speech. This underlines the importance of studying realistic, complex acoustic scenarios to gain more reliable and valid insights into auditory perception.

The paper was developed within the ECoClass-VR project, part of the DFG Priority Program SPP 2236 AUDICTIVE on Auditory Cognition in Interactive Virtual Environments. More information at www.spp2236-audictive.de.

We hope these findings contribute to advancing our understanding of auditory attention in complex, real-world listening situations.

Many thanks to the co-authors Robert Schmitt, Larissa Leist, Stephan Fremerey, Alexander Raake, Maria Klatte, and Janina Fels, and to the AUDICTIVE community for the inspiring collaboration and support.

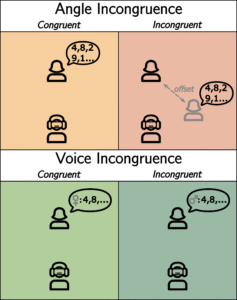

Paper published: Audiovisual angle and voice incongruence do not affect audiovisual verbal short-term memory in virtual reality

We are happy to announce that our paper “Audiovisual angle and voice incongruence do not affect audiovisual verbal short-term memory in virtual reality” by Cosima A. Ermert, Manuj Yadav, Jonathan Ehret, Chinthusa Mohanathasan, Andrea Bönsch, Torsten W. Kuhlen, Sabine J. Schlittmeier, and Janina Fels has just been published in PLOS ONE.

Virtual reality is increasingly used in research to simulate realistic environments, which may increase ecological validity. However, the visual component of virtual reality can affect participants, especially if auditory and visual information are incongruent. In this study, we investigated verbal short-term memory under two types of audiovisual incongruence: an angle incongruence, in which the perceived position of the sound source did not match that of its visual representation, and a voice incongruence, in which two virtual agents switched voices. The task was presented either on a computer screen or in virtual reality. We found no effect of either incongruence or of the display modality (computer screen vs. virtual reality), highlighting the complexity of audiovisual interaction.

This research is part of the priority program AUDICTIVE and was a cooperation with researchers from the Visual Computing Institute and the Work and Engineering Psychology at RWTH Aachen University.