We are happy to announce that our paper "Audiovisual angle and voice incongruence do not affect audiovisual verbal short-term memory in virtual reality" by Cosima A. Ermert, Manuj Yadav, Jonathan Ehret, Chinthusa Mohanathasan, Andrea Bönsch, Torsten W. Kuhlen, Sabine J. Schlittmeier, and Janina Fels has just been published in PLOS ONE.

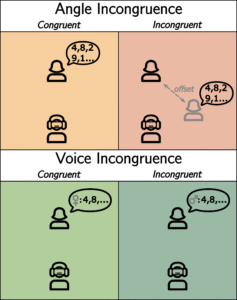

Virtual reality is increasingly used in research to simulate realistic environments, which may increase ecological validity. However, the visual component of virtual reality can affect participants, especially when auditory and visual information are incongruent. In this study, we investigated verbal short-term memory under two types of audiovisual incongruence: an angle incongruence, where the perceived position of the sound source did not match that of its visual representation, and a voice incongruence, where two virtual agents switched voices. The task was presented either on a computer screen or in virtual reality. We found no effect of either incongruence or of the display modality (computer screen vs. virtual reality), highlighting the complexity of audiovisual interaction.

This research is part of the priority program AUDICTIVE and was conducted in cooperation with researchers from the Visual Computing Institute and Work and Engineering Psychology at RWTH Aachen University.